1. Introduction

This document is intended for Techila Distributed Computing Engine (TDCE) End-Users who are using R as their main development environment. If you are unfamiliar with the terminology or the operating principles of the TDCE technology, information on these can be found in Introduction to Techila Distributed Computing Engine.

The structure of this document is as follows:

Introduction contains important information regarding the installation of required R packages that enable you to use TDCE with R. This Chapter also contains a brief introduction to the naming convention of the R scripts and introduces the peach and cloudfor functions, which are used for distributing computations from R to the TDCE environment.

Foreach Backend Examples contains instructions and examples on how to use the TDCE foreach backend. The TDCE foreach backend can be used to execute foreach structures in parallel. The backend can also be used with any function that supports foreach backends, such as the *ply function family in the plyr package.

Cloudfor Examples contains walkthroughs of code samples that use the cloudfor function. The example material includes code samples on how to control the number of iterations performed in each Job as well as transferring additional data files to the Techila Worker. More advanced examples are also included, which illustrate how to use semaphores and Active Directory (AD) impersonation.

Peach Tutorial Examples contains walkthroughs of simple example code samples that use the peach function. The example material illustrates how to control the core features of the peach function, including defining input arguments, transferring data files with the executable program and calling different functions from the R script that is sourced on the Techila Worker. After examining the material in this Chapter you should be able to split a simple locally executable program into two pieces of code (Local Control Code and Techila Worker Code), which in turn can be used to perform the computations in the TDCE environment.

Peach Feature Examples contains several examples that illustrate how to implement different features available in R peach. Each subchapter in this Chapter contains a walkthrough of an executable piece of code that illustrates how to implement one or more peach features. Each subchapter is named after the feature it focuses on. After examining the material in this Chapter you should be able to implement several features available in R peach in your own distributed application.

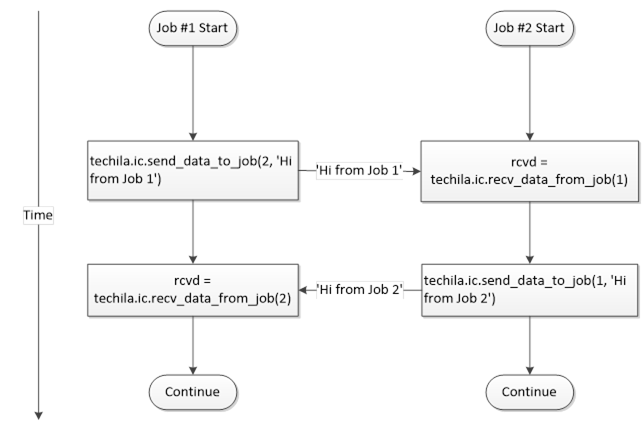

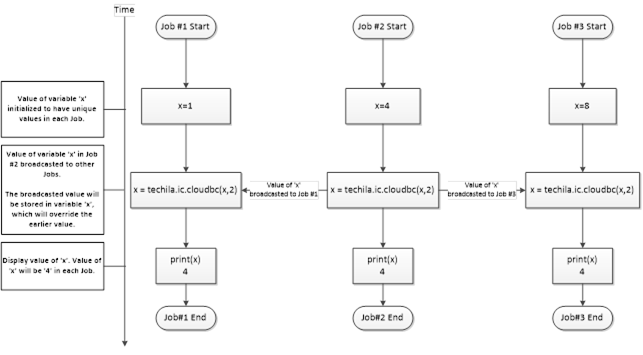

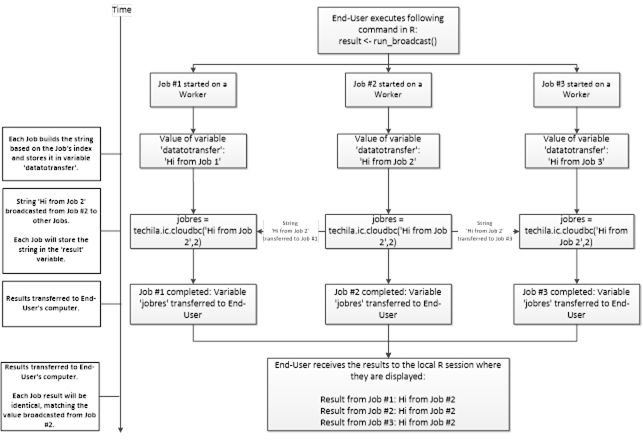

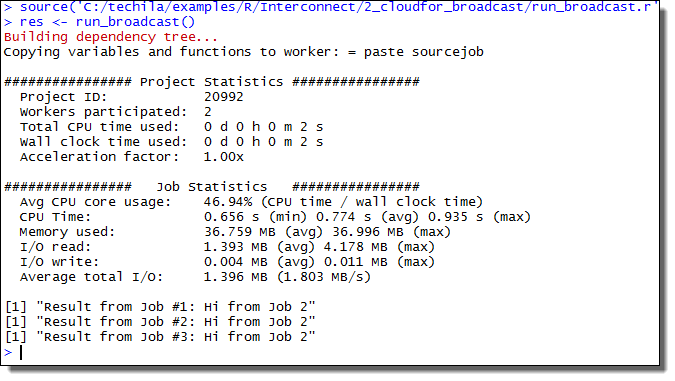

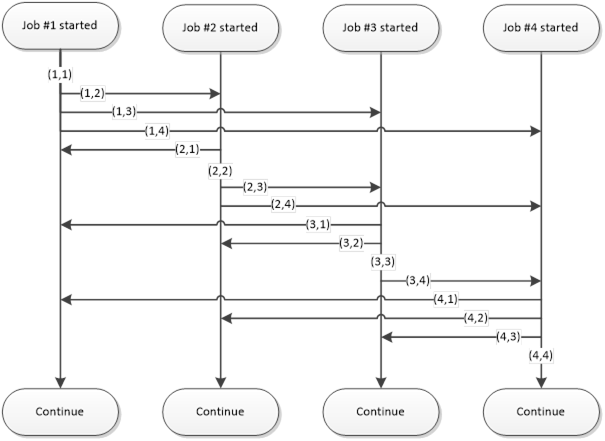

Interconnect contains cloudfor-examples that illustrate how the Techila interconnect feature can be used to transfer data between Jobs in different scenarios. After examining the material in this Chapter, you should be able to implement Techila interconnect functionality when using cloudfor-loops to distribute your application.

Screenshots in this document are from a Windows 7 operating system.

1.1. Installing Required Packages

In order to use the TDCE R API, the following R packages need to be installed:

- rJava
- R.utils
- techila

Note! If your user account does not have sufficient rights to install R packages to the default installation directory, please follow instructions on the following website to change the package installation directory.
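One possible approach is to add a personal library directory to the library search path with .libPaths(). After that, install.packages() installs into this directory by default, because it is the first entry. The sketch below uses a temporary directory so that it can be run safely. In practice you would choose a permanent folder; that location is an assumption left to you.

```r
# Personal library directory. A temporary folder is used here only so the
# example can be run safely; replace it with a permanent folder of your choice.
lib_dir <- file.path(tempdir(), "Rlib")
dir.create(lib_dir, showWarnings = FALSE, recursive = TRUE)

# Put the personal library first in the library search path for this session
.libPaths(c(lib_dir, .libPaths()))

# install.packages() uses the first entry of .libPaths() by default;
# the lib argument makes the target explicit, for example:
# install.packages("R.utils", lib = lib_dir)
print(.libPaths())
```

To make the setting persist across R sessions, you can set the R_LIBS_USER environment variable, for example in the .Renviron file in your home directory.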

1.1.1. Installing the rJava Package

This package can be installed using the following R command:

```r
install.packages("rJava")
```

After downloading and installing the package, the functions in the rJava package should become accessible from R. You can verify that the installation procedure was successful by loading the package with the following R command:

```r
library(rJava)
```

If the installation has failed, please ensure that the following environment variables are set correctly:

- JAR
- JAVA
- JAVAC
- JAVAH
- JAVA_HOME
- JAVA_LD_LIBRARY_PATH
- JAVA_LIBS
- JAVA_CPPFLAGS
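The following base R sketch reads the variables listed above with Sys.getenv() to show which of them are visible in your R session. On Linux and macOS, several of these values are usually part of R's own Java configuration, which the R CMD javareconf command updates. An empty value in the session environment therefore does not necessarily mean the configuration is wrong. Treat this as a diagnostic aid, not a definitive check.

```r
# Java-related variables listed above
java_vars <- c("JAR", "JAVA", "JAVAC", "JAVAH", "JAVA_HOME",
               "JAVA_LD_LIBRARY_PATH", "JAVA_LIBS", "JAVA_CPPFLAGS")

# Sys.getenv() returns a named character vector; unset variables become ""
java_env <- Sys.getenv(java_vars, unset = "")
print(java_env)

# Variables that are empty or not set in the current session
unset_vars <- java_vars[!nzchar(java_env)]
print(unset_vars)
```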

1.1.2. Installing the R.utils Package

This package can be installed using the following R command:

```r
install.packages("R.utils")
```

After downloading and installing the package, the functions in the R.utils package should become accessible from R. You can verify that the installation procedure was successful by loading the package with the following R command:

```r
library(R.utils)
```

1.1.3. Installing the techila Package

The techila package is included in the Techila SDK and contains TDCE R commands.

Please follow the steps below to install the techila package. The appearance of screens may vary, depending on your R version, operating system and display settings.

-

Launch R. After launching R, the R Console will be displayed.

Figure 1. R command window

Figure 1. R command window -

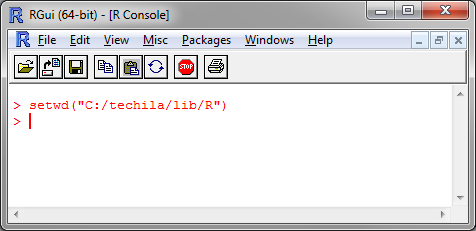

Change your current working directory to the R directory in the Techila SDK.

Figure 2. Changing the current working directory.

Figure 2. Changing the current working directory. -

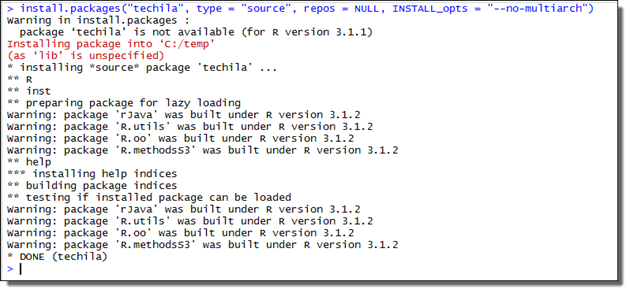

Install the

techilapackage using the following command:install.packages("techila", type = "source", repos = NULL, INSTALL_opts = "--no-multiarch") Figure 3. Installing without multiarch.

Figure 3. Installing without multiarch.The

techilapackage is now ready for use. You can verify that the package was installed correctly by loading the package with the following command:library(techila)

Note! Depending on your R-version, certain functions might masked by the functions in the other required packages. If you wish to use a masked function from a specific package, this can be achieved with

<package>::<function>notation.After loading the

techilapackage you can display thepeachandcloudforhelp using commands:?peach ?cloudfor
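The following base R sketch illustrates the <package>::<function> notation mentioned in the note above. It simulates a masking conflict by defining a function named mean in the workspace, which hides base::mean for unqualified calls. Masking by an attached package works in a similar way. The replacement function is purely illustrative.

```r
# Simulate masking: an unqualified call to mean() now finds this definition first
mean <- function(x, ...) "masked version"

masked_result <- mean(c(1, 2, 3))      # uses the masking definition
base_result <- base::mean(c(1, 2, 3))  # explicitly uses the function from base

rm(mean)  # remove the masking definition again
```

In an interactive session, conflicts(detail = TRUE) lists the objects that are masked on the search path.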

1.2. Updating the techila Package

This Chapter contains instructions for updating the techila package. These steps will need to be performed when upgrading to a newer Techila SDK version.

-

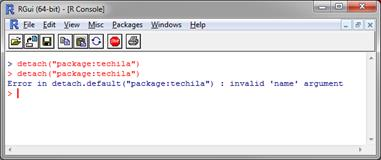

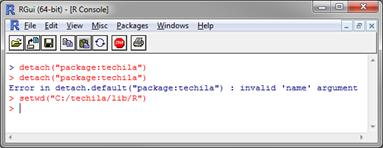

Detach the old

techilapackage using command:detach("package:techila")If the

techilapackage not loaded when the command above is executed, you might receive a corresponding error message. You can ignore this error message and continue with the update process. Figure 4. Detaching the package.

Figure 4. Detaching the package. -

Change your current working directory in R to the

<full path>/techila/lib/R Figure 5. Changing current working directory.

Figure 5. Changing current working directory. -

Install the new

techilapackage using command:install.packages("techila", type = "source", repos = NULL, INSTALL_opts = "--no-multiarch")The

techilapackage has now been updated.

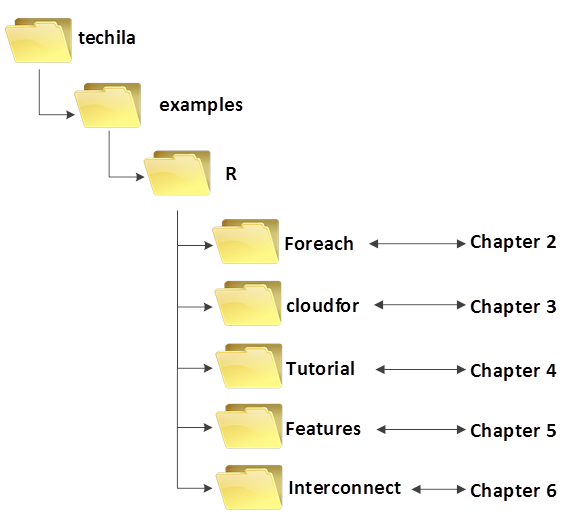
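To avoid the error message mentioned in the detach step, you can detach the techila package only when it is actually attached. This is a small convenience sketch in base R, not a required part of the update procedure.

```r
# Detach the techila package only if it is currently on the search path
if ("package:techila" %in% search()) {
  detach("package:techila")
}
```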

1.3. Example Material

The R scripts containing the example material discussed in this document can be found in the Foreach, Tutorial, Features, cloudfor and Interconnect folders in the Techila SDK. These folders contain subfolders, which in turn contain the actual R scripts that can be used to run the examples. Foreach Backend Examples contains walkthroughs of code samples that use the TDCE foreach backend. Cloudfor Examples contains examples for the cloudfor function. Peach Tutorial Examples and Peach Feature Examples contain walkthroughs of code samples that use the peach function. Interconnect contains walkthroughs of examples that use the Techila interconnect feature to transfer interconnect data packages between Jobs.

1.4. Naming Convention of the R Scripts

The typical naming convention of R scripts presented in this document is explained below:

- R scripts ending with dist contain the Techila Worker Code, which will be distributed to the Techila Workers when the Local Control Code is executed.
- R scripts beginning with run_ contain the Local Control Code, which will create the computational Project when executed locally on the End-User's own computer.
- R scripts beginning with local_ contain locally executable code, which does not communicate with the TDCE environment.

Please note that some R scripts and functions might be named differently, depending on their role in the computational Project.

1.5. R Foreach Backend

The techila package includes a foreach backend. After registering the backend, operations inside foreach structures can be pushed to the TDCE environment by using the %dopar% notation.

The backend can be registered with the following commands:

```r
library(techila)
registerDoTechila()
```

After registering the backend, operations in foreach structures can be executed by using the %dopar% notation as illustrated in the example code snippet below. When executed, this example code snippet would create a Project consisting of three Jobs. Each Job would calculate the square root of the loop counter value.

```r
library(techila)
library(foreach)
registerDoTechila()
result <- foreach(i=1:3) %dopar% {
  sqrt(i)
}
```

When registering the backend with the registerDoTechila function, additional parameters can be used to add or modify functionality.

The default behavior of the Techila foreach backend will execute one iteration in each Job. This means that if you have 1000 iterations, the Project will contain 1000 Jobs. If these iterations are computationally light (in the range of a second or two per iteration), you can improve performance by grouping several iterations into each Job by using the steps parameter. For example, the following syntax could be used to define that 10 iterations would be performed in each Job, reducing the number of Jobs in the Project to 100.

```r
library(techila)
library(foreach)
registerDoTechila(steps=10)  # Perform 10 iterations in each Job
result <- foreach(i=1:1000) %dopar% {
  sqrt(i)
}
```

In situations where your computations depend on R packages that are not included in the standard R distribution, you can mark them for transfer by using the packages parameter. The example below could be used to transfer the pracma and gbm packages from the End-User's computer to the Techila Workers.

```r
library(techila)
registerDoTechila(packages=list("pracma","gbm"))
```

It is also possible to use perfectly nested foreach loop structures. In perfectly nested loop structures, all content is inside the innermost loop, as illustrated in the code snippet below.

```r
library(techila)
library(foreach)
registerDoTechila()
foreach(b=1:4, .combine=`cbind`) %:%
  foreach(a=1:3) %dopar% {
    a * b
  }
```

The foreach backend also enables computations from other functions that use the foreach backend to be executed in TDCE. This includes functionality in, for example, the plyr and caret packages. The code snippets below illustrate how TDCE can be used with the ddply function from the plyr package (Example 1) and the train function from the caret package (Example 2).

Example 1: plyr ddply

```r
library(techila)
library(plyr)
registerDoTechila()
res <- ddply(iris, .(Species), numcolwise(mean), .parallel = TRUE)
```

Example 2: caret train

```r
# Load the packages needed in the example
library(mlbench)
library(techila)
library(caret)

registerDoTechila(packages=list("gbm","e1071"), # Additional packages needed on Workers
                  steps=10) # Defines how many loops are done in each Job.

data(Sonar)
inTraining <- createDataPartition(Sonar$Class, p = .75, list = FALSE)
training <- Sonar[ inTraining,]
testing <- Sonar[-inTraining,]

# 10-fold CV, repeated 10 times
fitControl <- trainControl(
  method = "repeatedcv",
  number = 10,
  repeats = 10)

# Compute in TDCE
result <- train(Class ~ ., data = training,
                method = "gbm",
                trControl = fitControl,
                verbose = FALSE)
```

1.6. R Peach Function

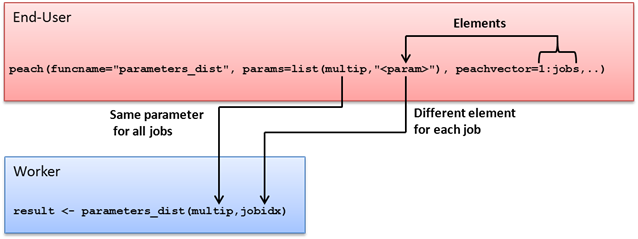

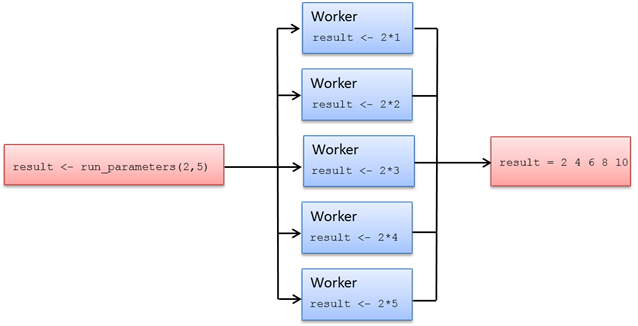

The peach function provides a simple interface that can be used to distribute even the most complex programs. When using the peach function, every input argument is a named parameter. Named parameters refer to a computer language’s support for function calls that clearly state the name of each parameter within the function call itself.

A minimalistic R peach syntax typically includes the following parameters:

- funcname
- params
- files
- peachvector
- datafiles

Using these parameters, the End-User can define input parameters for the executable function and transfer additional files to the Techila Workers. An example of a peach function syntax using these parameters is shown below:

```r
peach(funcname="name_of_the_function_that_will_be_called",
      params=list(variable_1,variable_2),
      files=list("R_script_that_will_be_sourced.R"),
      datafiles=list("file_1"),
      peachvector=1:jobs)
```

Tutorial examples on the use of these parameters can be found in Peach Tutorial Examples. General information on available peach parameters can also be displayed by executing the following commands in R.

```r
library(techila)
?peach
```

1.7. R Cloudfor Function

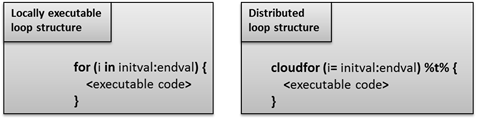

The cloudfor function provides an even simpler way to distribute computationally intensive for-loop structures to the TDCE environment. The cloudfor function is based on the peach function, which means that all peach features are also available in cloudfor.

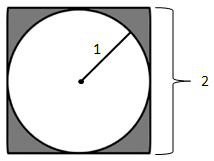

The loop structure that will be distributed and executed on Techila Workers is marked by replacing the for-loop with a cloudfor-loop. In addition, the syntax for defining the loop iterations is slightly modified as illustrated in the image below.

Converting for-loop structures to cloudfor-loop structures enables you to execute the computationally intensive operations in the Techila Distributed Computing Engine environment.

The <executable code> notation in the example above represents the algorithm that will be executed during each iteration of the loop structure.

The iteration interval in the cloudfor version is given as a vector ranging from initval to endval. These variables correspond to the same values as in the locally executable for-loop, representing the start and end values for the loop iterations.

The %t% notation in the cloudfor version defines that the following code block enclosed in curly brackets should be executed in the TDCE environment. When using multiple cloudfor-loops, the outer cloudfor-loops are defined with the %to% notation and the %t% notation is used to define the innermost cloudfor-loop. A code sample illustrating multiple nested loops can be found later in this Chapter.

Please note that iterations of the cloudfor-loop might be performed on different Techila Workers, meaning all computational operations must be independent of each other. For example, the conversion shown below is possible, because all the iterations are independent.

Table: Locally executable for-loop and the corresponding distributed cloudfor version.

But it is NOT possible to convert the loop structure shown below. This is because the value of A in the current iteration (e.g. i=3) depends on the value of the previous iteration (i=2).

Table: Locally executable for-loop with a recursive dependency. Conversion to a distributed version is NOT possible.
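The following base R sketch illustrates the distinction with two hypothetical loops. In the first loop, each element depends only on i, so the body can be written as independent per-iteration calls, which is the shape a cloudfor-loop requires. In the second loop, A depends on the value from the previous iteration. The loop bodies are assumptions chosen for illustration.

```r
n <- 10

# Independent iterations: A_loop[i] depends only on i
A_loop <- numeric(n)
for (i in 1:n) {
  A_loop[i] <- i * i
}
# The same work expressed as independent per-iteration calls
A_map <- sapply(1:n, function(i) i * i)

# Recursive dependency: B[i] needs B[i - 1], so the iterations
# cannot be computed independently of each other
B <- numeric(n)
B[1] <- 1
for (i in 2:n) {
  B[i] <- B[i - 1] + i
}
```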

When the cloudfor keyword is encountered, all variables and functions that are required to execute the code on the Techila Worker are automatically transferred and made available on the Techila Worker.

The number of Jobs in the Project will be automatically set by evaluating the execution time of iterations locally. In cases where the execution of a single iteration is short, multiple iterations will be performed in each Job. If the execution time of a single iteration is long (by default more than 20 seconds), one iteration will be performed in each Job. The number of iterations performed in a single Job can also be controlled with the .steps control parameter as shown below.

```r
A <- cloudfor(i=1:10,.steps=2) %t% {
  <executable code>
}
```

In the example above, two iterations would be performed in each Job. This would create a Project containing five (5) Jobs, because the loop contains ten (10) iterations.
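The grouping of iterations into Jobs can be sketched in plain R. The hypothetical helper split_into_jobs below assigns consecutive iterations to Jobs in groups of steps. Whether TDCE assigns iterations in exactly this order is an assumption. The Job counts, however, match the ones described in this document: 10 iterations with 2 per Job, 1000 with 10 per Job, and 10000 with 5000 per Job.

```r
# Hypothetical helper: group loop iterations into Jobs of `steps` iterations
split_into_jobs <- function(iterations, steps) {
  job_index <- ceiling(seq_along(iterations) / steps)
  split(iterations, job_index)
}

jobs <- split_into_jobs(1:10, steps = 2)
length(jobs)  # number of Jobs
jobs[[3]]     # iterations performed in the third Job
```

If steps does not divide the number of iterations evenly, the last Job in this sketch simply receives fewer iterations.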

Because cloudfor is based on peach, you can also use peach parameters by prepending the name of the parameter with a dot (.<peach parameter>). This is illustrated in the syntax below, where the streaming feature has been enabled with the .stream parameter.

```r
A <- cloudfor(i=1:10,.steps=2,
              .stream=TRUE) %t% {
  <executable code>
}
```

It is also possible to distribute perfectly nested loop structures. In perfectly nested for-loops, all content is inside the innermost for-loop. This means that if you have a locally executable perfectly nested for-loop structure, you can distribute the computations to the TDCE environment by marking the executable code as shown below.

```r
A <- cloudfor (i = 1:10) %to%
  cloudfor (j = 1:10) %t% {
    <executable code>
  }
```

When using multiple, perfectly nested cloudfor-loops, the outer loops are defined with the %to% notation. The innermost cloudfor-loop is defined with the %t% notation, which also indicates that the code in the following curly brackets should be executed in the TDCE environment.
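A perfectly nested loop structure can be thought of as one flat set of independent iterations. The following base R sketch uses expand.grid to enumerate the iteration space of the two nested 1:10 loops above. It then evaluates a placeholder loop body once per combination; the body i * j is an assumption standing in for <executable code>. The sketch only illustrates the iteration space. It does not show how cloudfor assigns these iterations to Jobs or how it arranges the results.

```r
# Every (i, j) combination of the nested loops as one flat table
grid <- expand.grid(i = 1:10, j = 1:10)
nrow(grid)  # total number of independent iterations

# Evaluate the placeholder loop body once per combination
results <- mapply(function(i, j) i * j, grid$i, grid$j)
```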

In situations where you have several, perfectly nested cloudfor-loops, only the innermost loop is marked with the %t% notation. All other loops are marked with %to%. This is illustrated below:

```r
A <- cloudfor (i = 1:10) %to%
  cloudfor (j = 1:10) %to%
    cloudfor (k = 1:10) %t% {
      <executable code>
    }
```

It is also possible to evaluate regular for-loop structures inside cloudfor-loops. For example, the syntax shown below would evaluate the innermost for-loop (j in 1:10) in each Job.

```r
A <- cloudfor (i = 1:10) %t% {
  <executable code 1>
  for (j in 1:10) {
    <executable code 2>
  }
  <executable code 3>
  return(<your result data>) # This will be returned from each Job.
}
```

However, it is NOT possible to place several cloudfor-loops on the same level inside a cloudfor-loop, as in the structure below.

```r
# NOT supported: two cloudfor-loops on the same level inside an outer cloudfor-loop
A <- cloudfor (i = 1:10) %to%
  cloudfor (j = 1:10) %t% {
    <executable code>
  }
  cloudfor (k = 1:10) %t% {
    <more executable code>
  }
```

General information on available control parameters can also be displayed by executing the following commands in R.

```r
library(techila)
?cloudfor
?peach
```

Please note that cloudfor-loops should only be used to divide the workload in computationally expensive for-loops. If you have a small number of computationally light operations, using a cloudfor-loop will not result in better performance.

As an exception to this rule, some of the examples discussed in this document are relatively simple, as they are only intended to illustrate the mechanics of using the cloudfor function.

1.8. Process Flow

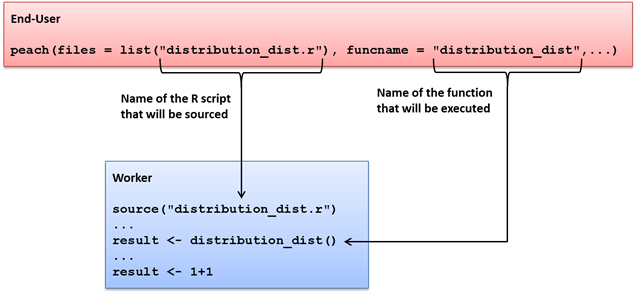

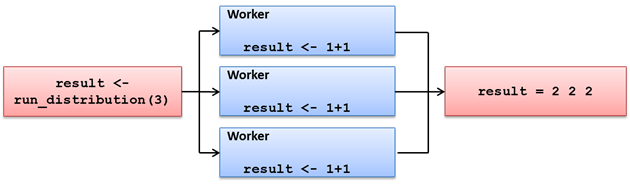

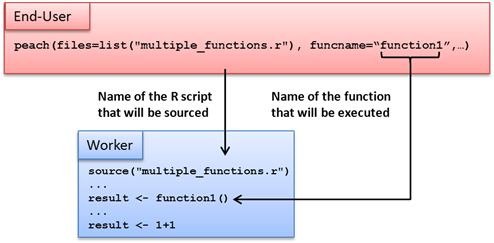

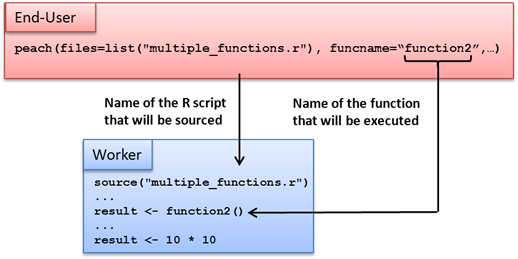

When a Project is created with peach or cloudfor, each Job in a computational Project will have a separate R workspace. Functions and variables are loaded during the preliminary stages of each computational Job by sourcing the R files defined in the files parameter (when using peach) and by loading the parameters stored in the techila_peach_inputdata file.

When a Job is started on a Techila Worker, the peachclient.r script (included in the techila package) is called. The peachclient.r file is an R script that acts as a wrapper for the Techila Worker Code and is responsible for transferring parameters to the executable function and for returning the final computational results. This functionality is hidden from the End-User. The peachclient.r will be used automatically by computational Projects created with peach or cloudfor.

The peachclient.r wrapper also sets a preliminary seed for the random number generator by using the R set.seed() command. Each Job in a computational Project will receive a unique random number seed based on the current system time and the jobidx parameter. The preliminary random number seeding can be overridden by calling the set.seed() function in the Techila Worker Code with an appropriate random seed.
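The following sketch shows how Techila Worker Code could override the preliminary seed. It assumes a hypothetical worker function that receives the Job index as an argument. The formula base_seed + jobidx is an illustrative choice, not a TDCE convention. It makes each Job's random numbers reproducible while keeping them different between Jobs.

```r
# Hypothetical Techila Worker Code that overrides the preliminary seed
worker_code <- function(jobidx, base_seed = 1234) {
  set.seed(base_seed + jobidx)  # reproducible, Job-specific seed
  runif(3)
}

run1 <- worker_code(jobidx = 1)
run1_again <- worker_code(jobidx = 1)
run2 <- worker_code(jobidx = 2)
```

Offset seeds do not guarantee statistically independent streams. If that matters for your application, consider the L'Ecuyer-CMRG generator (RNGkind("L'Ecuyer-CMRG") together with parallel::nextRNGStream()), which is a more rigorous option.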

1.8.1. Peach Function

The list below contains some of the R specific activities that are performed automatically when the peach function is used to create a computational Project.

- The peach function is called locally on the End-User's computer.
- R scripts listed in the files parameter are transferred to Techila Workers.
- Files listed in the datafiles parameter are transferred to Techila Workers.
- The peachclient.r file is transferred to Techila Workers.
- Input parameters listed in the params parameter are stored in a file called techila_peach_inputdata, which is transferred to Techila Workers.
- The files listed in the files and datafiles parameters and the files techila_peach_inputdata and peachclient.r are copied to the temporary working directory on the Techila Worker.
- The peachclient.r wrapper is called on the Techila Worker.
- Variables stored in the file techila_peach_inputdata are loaded to the R workspace.
- Files listed in the files parameter are sourced using the R source command.
- The <param> notation is replaced with a peachvector element.
- The peachclient calls the function defined in the funcname parameter with the input parameters.
- The peachclient saves the result into a file, which is returned from the Techila Worker to the End-User.
- The peach function reads the output file and stores the result in a list element (if a callback function is used, the result of the callback function is returned).
- The entire list is returned by the peach function.
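The following plain R sketch imitates part of the flow listed above, locally: the <param> substitution, the call to the function named in funcname, and the collection of results into a list. local_peach is a hypothetical helper, not part of the techila package. It does not transfer files, source R scripts, or contact Techila Workers, and it assumes that "<param>" appears as a complete element of params.

```r
# Hypothetical local stand-in for the peach process flow
local_peach <- function(funcname, params, peachvector) {
  fun <- match.fun(funcname)
  # One "Job" per peachvector element
  lapply(peachvector, function(element) {
    # Replace the "<param>" placeholder with the current peachvector element
    job_args <- lapply(params, function(p) {
      if (identical(p, "<param>")) element else p
    })
    do.call(fun, job_args)
  })
}

multiply <- function(x, y) x * y
res <- local_peach("multiply", params = list(2, "<param>"), peachvector = 1:3)
```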

1.8.2. Cloudfor Function

The list below contains some of the R specific activities that are performed automatically when using the cloudfor function to create a computational Project.

- The innermost cloudfor-loop (defined by %t%) is encountered in the End-User's local R code.
- The execution time required for a loop iteration is estimated.
- The code block within the innermost cloudfor-loop is stored in the file techila_peach_inputdata.
- Additional functions and workspace variables required when executing the Techila Worker Code are stored in the techila_peach_inputdata file, which is transferred to the Techila Workers.
- The peachclient.r and techila_for.r files are transferred to the Techila Workers.
- The peachclient.r wrapper is called on the Techila Worker.
- The peachclient loads the variables and functions stored in the techila_peach_inputdata file to the workspace.
- The peachclient calls the techila_for.r wrapper for the specified number of iterations.
- The techila_for.r wrapper executes the code block each time it is called.
- Results from the loop iterations are saved in list form to an output file, which is returned from the Techila Worker.
- Output files are read on the End-User's computer and results are stored as list elements.
- The entire list is returned as the result.
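The following plain R sketch imitates how a stored code block can be evaluated once per iteration against a workspace of transferred variables, as described in the list above. run_job, code_block and offset are hypothetical names. This is not the actual implementation of techila_for.r.

```r
# The loop body stored as an unevaluated expression
code_block <- quote(sqrt(i) + offset)

# Evaluate the block once per iteration, binding the loop variable each time
run_job <- function(block, var, iterations, workspace) {
  lapply(iterations, function(value) {
    env <- list2env(setNames(list(value), var), parent = workspace)
    eval(block, envir = env)
  })
}

# Workspace variables that would be transferred along with the code block
workspace <- list2env(list(offset = 100))
job_results <- run_job(code_block, "i", iterations = c(1, 4, 9),
                       workspace = workspace)
```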

2. Foreach Backend Examples

This Chapter contains examples on how to use the Techila Distributed Computing Engine (TDCE) foreach backend to execute computations in a TDCE environment. The example material discussed in this Chapter, including R scripts and data files can be found in the subdirectories under the following folder in the Techila SDK:

techila\examples\R\Foreach

2.1. Executing Foreach Computations in Techila Distributed Computing Engine

This example shows how to use the foreach backend to execute computations in a TDCE environment by using the foreach %dopar% notation.

The material discussed in this example is located in the following folder in the Techila SDK:

techila\examples\R\Foreach\foreach

Note! In order to run this example, the foreach package needs to be installed.

Before you are able to use the TDCE foreach backend, you will need to load the TDCE library and register the backend with the following R commands:

```r
library(techila)
registerDoTechila(sdkroot = "<path to your `techila` directory>")
```

The notation in <> needs to be replaced with the location of your Techila SDK's techila directory. For example, if your Techila SDK is located in C:/techila, then you could register the backend with the following syntax.

```r
registerDoTechila(sdkroot = "C:/techila")
```

After registering the TDCE foreach backend, computational operations in foreach structures can be executed in a TDCE environment by using the %dopar% notation as shown in the example snippet below.

```r
result <- foreach(i=1:5) %dopar% {
  i*i
}
```

The example code snippet above would create a Project consisting of five Jobs. Each Job executes one iteration of the foreach loop structure. The result would be stored in the result variable in list format.

In situations where the computational operations performed in a single iteration are computationally light, it would be inefficient to create one Job for each iteration. A more efficient implementation can be done by using the .options.steps parameter to define a suitably large number of iterations for each Job. This is illustrated in the code snippet below.

```r
result <- foreach(i=1:10000, .options.steps=5000) %dopar% {
  i*i
}
```

The example code snippet above consists of 10000 iterations. The example code snippet also defines that 5000 iterations should be executed in each Job. This means that when the example code snippet is executed, it would create a Project consisting of two Jobs, where each Job would compute 5000 iterations.

All parameters available for peach can also be used with foreach. The general syntax for defining parameters is:

.options.<peach parameter>

For more information about available peach parameters, please see:

```r
?peach
```

The TDCE foreach backend also supports using the foreach .combine option to control how the results are managed.
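Conceptually, the .combine option decides how the per-iteration results are merged. The following base R sketch does not use foreach or TDCE. It shows the difference between the default list result and results merged with c or rbind. foreach applies the combine function to the results for you, so this only mimics the outcome.

```r
# Without .combine: one list element per iteration
per_iteration <- lapply(1:5, function(i) i * i)

# Like .combine=c: results merged pairwise with c() into a vector
combined_c <- Reduce(c, per_iteration)

# Like .combine=rbind: each result becomes one row of a matrix
combined_rbind <- do.call(rbind, per_iteration)
```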

2.1.1. Foreach example walkthrough

The foreach example included in the Techila SDK is shown below:

# Example documentation: http://www.techilatechnologies.com/help/r_foreach_foreach

# Copyright Techila Technologies Ltd.

# Uncomment to install required packages if required.

# install.packages("iterators")

# install.packages("foreach")

run_foreach <- function() {

# This function registers the Techila foreach backend and uses the %dopar%

# notation to execute the computations in parallel, in the Techila

# environment.

#

# Example usage:

#

# source('run_foreach.r')

# res <- run_foreach()

# Load required packages

library(techila)

library(foreach)

# Register the Techila foreach backend and define the 'techila' folder

# location.

registerDoTechila(sdkroot = "../../../..")

iters=10

# Create the Project using foreach and %dopar%.

result <- foreach(i=1:iters,

.options.steps=2, # Perform 2 iterations per Job

.combine=c # Combine results into numerical vector

) %dopar% { # Execute computations in parallel

sqrt(i) # During each iteration, calculate the square root value of i

}

# Print and return results.

print(result)

result

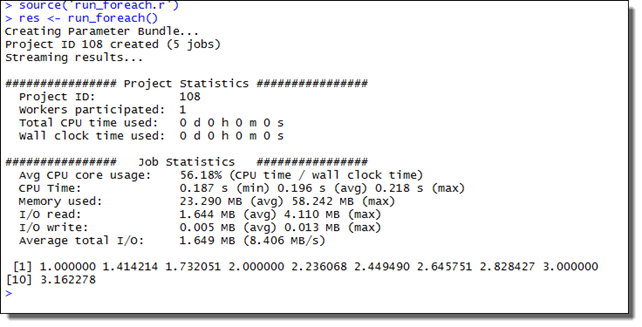

}This example will create a Project consisting of five Jobs. The code starts by loading the required packages: techila and foreach.

After this, the TDCE foreach backend is registered and the Techila SDK’s techila directory location is defined.

The foreach syntax used to perform the computations in TDCE starts by defining that the computational result should be stored in variable result and that the number of iterations should range from 1 to 10.

Each Job will perform two iterations. Because the total number of iterations was set to 10, this means the Project will consist of five Jobs.

The results will be combined with the c operator. This means that the results will be returned as a numerical vector, instead of a list.

The %dopar% notation will push the computations to the TDCE environment. (If you would change this to %do%, the operations would be executed sequentially on your computer.)

The code that will be executed in each iteration is quite trivial: it simply calculates the square root of the loop counter i.

After the Project has been completed, the results will be returned from the TDCE environment and printed to the R console on your computer.

2.1.2. Creating the Project

To create the computational Project, change your current working directory in your R environment to the directory that contains the example material relevant to this example.

After having browsed to the correct directory, you can source the Local Control Code using command:

```r
source("run_foreach.r")
```

After having sourced the file, create the computational Project using command:

```r
res <- run_foreach()
```

This will create a Project consisting of five Jobs, each Job performing two iterations of the foreach loop structure. The example screenshot below illustrates what the expected output looks like.

2.2. Using the Techila Distributed Computing Engine Foreach Backend with Plyr Functions

The material discussed in this example is located in the following folder in the Techila SDK:

techila\examples\R\Foreach\plyr

Note! In order to run this example, the foreach and plyr packages need to be installed.

When using functions from the plyr package to perform computations, the .parallel option can be used to perform computations in parallel, using the backend provided by foreach. This means that after registering the TDCE foreach backend, computations can be executed in TDCE with the .parallel option.

Note! In order to use the TDCE backend with functions in the plyr package, the plyr package will need to be transferred to the Techila Workers using the .packages parameter. The plyr package contains platform-specific files (.dll for Windows and .so for Linux), meaning the Techila Workers must have the same operating system as the one you are using on your R workstation. In other words, if you are using a Windows computer, the Techila Workers must also have a Windows operating system.

2.2.1. Executing plyr functions in parallel

In order to execute functions from the plyr package in parallel using TDCE, the following packages need to be loaded: techila and plyr. After loading the packages, the TDCE backend can be registered using the syntax illustrated below:

```r
library(techila)
library(plyr)
registerDoTechila(sdkroot = "<path to your `techila` directory>")
```

The notation in <> needs to be replaced with the location of your techila directory. For example, if your Techila SDK is located in C:/techila, then you could register the backend with the following syntax.

```r
registerDoTechila(sdkroot = "C:/techila")
```

After registering the TDCE backend, functions from the plyr package can be executed in a TDCE environment by setting .parallel=TRUE as shown in the example snippet below.

```r
res <- aaply(ozone,
             1,
             mean,
             .parallel=TRUE,
             .paropts=list(.options.packages=list("plyr")))
```

The array ozone is included in the plyr package and is a 24 x 24 x 72 numeric array. The code snippet above would calculate the average value for each row in the ozone array in a separate Job, meaning the Project would consist of 24 Jobs. The plyr package is transferred to the Techila Workers by using the packages parameter, which is passed as .options.packages in .paropts.
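To help decide which packages to list for transfer, the following base R sketch checks two things for each package name. First, it checks whether the package ships with a standard R installation, meaning its priority is base or recommended. Second, it checks whether the package contains compiled code in a libs folder; this is the kind of platform-specific .dll/.so content the note above refers to. package_transfer_info is a hypothetical helper, not part of the techila package. Treating base and recommended packages as available on the Techila Workers is an assumption about how R is installed on the Workers.

```r
# Hypothetical helper: summarise packages you might list for transfer
package_transfer_info <- function(pkgs) {
  installed <- installed.packages()
  # Priority is "base" or "recommended" for packages that come with R;
  # packages that are not installed locally get NA
  priority <- installed[match(pkgs, installed[, "Package"]), "Priority"]
  data.frame(
    package  = pkgs,
    standard = priority %in% c("base", "recommended"),
    # An installed package with a "libs" folder contains compiled code
    compiled = vapply(pkgs, function(p) nzchar(system.file("libs", package = p)),
                      logical(1), USE.NAMES = FALSE),
    stringsAsFactors = FALSE
  )
}

package_transfer_info(c("stats", "plyr", "gbm"))
```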

2.2.2. Example walkthrough

This example illustrates how the computations performed with ddply can be executed in parallel in a TDCE environment. The example uses the iris data frame, which is included in R's datasets package. The code for the example in the Techila SDK is shown below:

# Example documentation: http://www.techilatechnologies.com/help/r_foreach_plyr

# Copyright Techila Technologies Ltd.

#

# Uncomment to install required packages if required.

# install.packages("plyr")

# install.packages("iterators")

# install.packages("foreach")

run_ddply<- function() {

# This function registers the Techila foreach backend and uses the

# .parallel option in ddply to execute computations in parallel,

# in the Techila environment.

#

# Example usage:

#

# source('run_ddply.r')

# res <- run_ddply()

# Load required packages.

library(techila)

library(plyr)

# Register the Techila foreach backend and define the 'techila' folder

# location.

registerDoTechila(sdkroot = "../../../..")

# Create the computational Project using ddply with the .parallel=TRUE option.

result <- ddply(iris, # Split this data frame

.(Species), # According to the values in the Species column

numcolwise(mean), # And perform this operation on the column data.

.parallel=TRUE # Process the computations in Techila

)

# Print and return results

print(result)

result

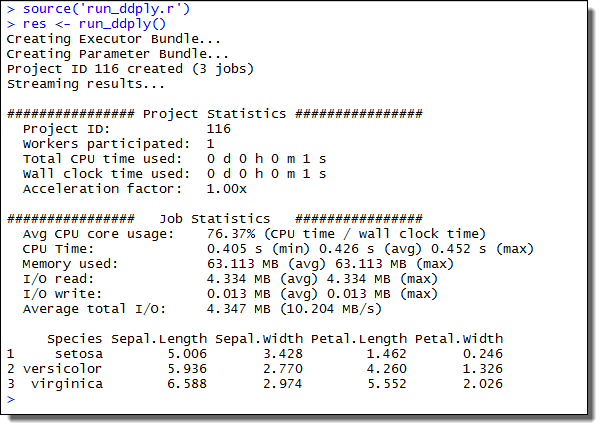

}The code starts by loading the required packages: techila and plyr. After loading the packages, the TDCE foreach backend is registered and the Techila SDK’s techila directory location is defined.

The operation will be performed on data frame iris, which will be split into parts according values in Species . The operation numcolwise(mean) will be executed for each data frame part. The computations are marked for parallel execution and the required plyr package will be transferred to all participating Techila Workers.

The iris data frame contains three unique values in the Species column and the data for each Species will be processed in a single Job. This means that the Project will consist of three Jobs.

2.2.3. Creating the Project

To create the computational Project, change your current working directory in your R environment to the directory that contains the example material relevant to this example.

After having browsed to the correct directory, you can source the Local Control Code using command:

source("run_ddply.r")

After having sourced the file, create the computational Project using command:

res <- run_ddply()

This will create a Project consisting of three Jobs, each Job processing the data for one Species. The example screenshot below illustrates what the expected output looks like.
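To see what the three Jobs compute, the ddply call can be approximated sequentially with base R's split-apply-combine functions. The following is a minimal local sketch, not the Techila backend: split() creates one data frame part per Species (one Job each in the TDCE example), and colMeans() over the numeric columns plays the role of numcolwise(mean).

```r
# Sequential, base R approximation of:
#   ddply(iris, .(Species), numcolwise(mean))
# 'iris' comes from R's built-in datasets package.
groups <- split(iris, iris$Species)   # one data frame part per Species
length(groups)                        # 3 parts, i.e. 3 Jobs in the TDCE example

# Mean of every numeric column within each part
part_means <- lapply(groups, function(part) {
  num_cols <- vapply(part, is.numeric, logical(1))
  colMeans(part[, num_cols])
})

# Combine the per-part results into one data frame, one row per Species
result <- data.frame(Species = names(part_means),
                     do.call(rbind, part_means),
                     row.names = NULL)
print(result)
```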

3. Cloudfor Examples

This Chapter contains walkthroughs of the example material that uses the cloudfor function included in the Techila SDK. The examples in this Chapter highlight the following subjects:

- Controlling the Number of Iterations Performed in Each Job
- Transferring Data Files
- Managing Streamed Results

The example material used in this Chapter, including R scripts and data files, can be found in the subfolders under the following folder in the Techila SDK:

techila\examples\R\cloudfor\<example specific subfolder>

Please note that the example material in this Chapter is only intended to highlight some of the available features in cloudfor. For a complete list of available control parameters, execute the following commands in R.

library(techila)
?cloudfor

3.1. Controlling the Number of Iterations Performed in Each Job

This example is intended to illustrate how to convert a simple, locally executable for-loop structure to a cloudfor-loop structure. Executable code snippets are provided for both the locally executable loop structure and the equivalent cloudfor implementation. This example also illustrates how to control the number of iterations performed during a single Job.

The material used in this example is located in the following folder in the Techila SDK:

techila\examples\R\cloudfor\1_number_of_jobs

When using cloudfor to distribute a loop structure, the maximum number of Jobs in the Project will be automatically limited by the number of iterations in the loop structure. For example, the loop structure below contains 10 iterations, meaning that the maximum number of Jobs in the Project would be 10.

cloudfor(counter=1:10) %t% {
  <executable code>
}

By default, cloudfor will estimate the execution time of iterations locally on the End-User's computer. This is done by executing the code block (as represented by the <executable code> notation) for a minimum of one second. Based on the number of iterations performed during this estimation, each Job will be assigned a suitable number of loop iterations so that each Job will last for a minimum of 20 seconds.

If no iterations have been completed within one second, the evaluation will continue for a maximum of 20 seconds. If no iterations have been completed after evaluating the code block for 20 seconds, the number of iterations in each Job will be set to one (1).
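The estimation logic can be modeled with the following simplified sketch. The helper estimate_steps is hypothetical and is not part of the techila package; it only illustrates the idea of timing the code block locally and deriving an iteration count per Job from the measured time per iteration. Unlike cloudfor, this sequential model cannot interrupt an iteration that is still running, so the 20-second limit is only checked after an iteration has finished.

```r
# Hypothetical helper, NOT part of the techila package: a simplified,
# sequential model of the default estimation described above.
estimate_steps <- function(code_block, n_iter, min_time = 1, max_time = 20,
                           target_job_time = 20) {
  completed <- 0
  start <- proc.time()[["elapsed"]]
  repeat {
    elapsed <- proc.time()[["elapsed"]] - start
    # Stop once at least one iteration has finished and min_time has passed,
    # or when every iteration of the loop has already been evaluated.
    if ((completed > 0 && elapsed >= min_time) || completed >= n_iter) break
    code_block(completed + 1)  # evaluate the loop body for the next counter
    completed <- completed + 1
  }
  elapsed <- proc.time()[["elapsed"]] - start
  # A single iteration that lasted longer than max_time: one iteration per Job.
  if (completed == 0 || (completed == 1 && elapsed >= max_time)) return(1L)
  time_per_iter <- elapsed / completed
  steps <- ceiling(target_job_time / time_per_iter)
  as.integer(min(max(steps, 1), n_iter))
}

# Example: a loop body that takes about 0.05 s, with shortened time limits
# so that the sketch finishes quickly.
estimate_steps(function(i) Sys.sleep(0.05), n_iter = 100,
               min_time = 0.1, target_job_time = 0.2)
```

The result is capped at the total number of iterations, because a Project cannot contain fewer than one Job.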

If you require more control over the number of iterations that will be performed in each Job, this can be achieved by using the .steps control parameter. The general syntax for using this control parameter is shown below:

cloudfor(counter=1:10,.steps=<iterations>) %t% {
  <executable code>
}

The <iterations> notation can be used to define the number of iterations that should be performed in each Job. For example, the syntax shown below would define that two iterations should be performed in each Job.

cloudfor(counter=1:10,.steps=2) %t% {
  <executable code>
}

Please note that when using the .steps parameter, you will also fundamentally be defining the length of a single Job. If you only perform a small number of short iterations in each Job, the Jobs might be extremely short, resulting in poor overall efficiency. It is strongly advised to use values that ensure the execution time of a Job will not be too short.

3.1.1. Locally executable program

The locally executable program used in this example is shown below.

# Example documentation: http://www.techilatechnologies.com/help/r_cloudfor_1_number_of_jobs

# Copyright Techila Technologies Ltd.

local_function <- function(loops) {

# This function will be executed locally on your computer and will not

# communicate with the Techila environment.

#

# Example usage:

#

# loops <- 100

# result <- local_function(loops)

result <- rep(0, loops) # Create empty array for results

for (i in 1:loops) {

result[i] = i * i # Store result in array

}

result

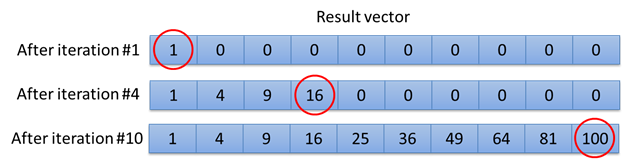

}The code contains a single for-loop, which contains a single multiplication operation where the value of the i variable is squared. The value of the i variable will be replaced with the iteration number, which will be different each iteration. The result of the multiplication will be stored in the result vector at the index determined by the value of the i variable.

The locally executable program can be executed by changing your current working directory in R to the directory containing the material for this example and executing the command shown below:

source("local_function.r")

result <- local_function(10)Executing the command shown above will calculate 10 iterations. The values stored in the result-array are shown in the image below.

3.1.2. The cloudfor version

The cloudfor version of the locally executable program is shown below.

# Example documentation: http://www.techilatechnologies.com/help/r_cloudfor_1_number_of_jobs
# Copyright Techila Technologies Ltd.

library(techila)

run_jobs <- function(loops) {
  # This function contains the distributed version, where operations inside the
  # loop structure will be executed on Workers.
  #
  # Example usage:
  #
  # loops <- 100
  # result <- run_jobs(loops)

  result <- cloudfor(i=1:loops,
                     .sdkroot="../../../..",  # Path of the techila folder
                     .steps=2                 # Perform two iterations per Job
                     ) %t% {                  # Start of code block that will be executed on Workers
    i * i                                     # This operation will be performed on the Workers
  }                                           # End of code block executed on Workers
}

The command library(techila) will be executed when the file is sourced. After executing the command, the functions in the techila package will be available.

The for-loop in the locally executable version has been replaced with a cloudfor-loop. The %t% notation after the cloudfor-loop defines that the code inside the following curly brackets should be executed in the Techila Distributed Computing Engine (TDCE) environment. In this example, the executable code block only contains the operation where the value of the i variable is squared.

The .sdkroot control parameter is used to define the location of the techila directory. In this example, a relative path definition has been used. This definition will be used in all of the R example material in the Techila SDK.

The .steps control parameter is used to define that two iterations should be calculated in each Job. This means that, for example, if the number of loops is set to 10, the number of Jobs will be 5 (the number of loops divided by the value of the .steps parameter).

3.1.3. Creating the computational project

The computational Project can be created by executing the cloudfor version of the program. The cloudfor version can be executed by changing your current working directory in R to the directory containing the material for this example and executing the command shown below:

source("run_jobs.r")
result <- run_jobs(10)

After you have executed the commands, the Project will be automatically created and will consist of five (5) Jobs. These Jobs will be assigned to and computed on Techila Workers in the TDCE environment. Each Job will compute two iterations of the loop structure and will return a list containing the two values returned from the loop evaluations. This list will be stored in an output file, which will be automatically transferred to the Techila Server.

After all computational Jobs have been completed, the result files will be transferred to your computer from the Techila Server. The values stored in the output files will be read and stored in the result-array. This array will contain all the values from the iterations and will correspond to the output generated by the locally executable program discussed earlier in this Chapter.
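The relationship between .steps, the number of Jobs and the final result can be checked locally with the sequential emulation below. It uses no Techila components and assumes that each Job receives a block of consecutive iterations, which matches the two-iterations-per-Job layout described above.

```r
# Local, sequential emulation of run_jobs(10); no Techila components are used.
# Assumption: with .steps=2, Job k evaluates iterations 2k-1 and 2k.
loops <- 10
steps <- 2
jobs <- split(seq_len(loops), ceiling(seq_len(loops) / steps))
length(jobs)  # 5 Jobs

# Each emulated Job returns a list with one value per iteration it evaluates.
job_results <- lapply(jobs, function(iters) lapply(iters, function(i) i * i))

# Flatten the per-Job lists back into one vector in iteration order.
result <- unlist(job_results, use.names = FALSE)

# Same values as the locally executable program
all(result == (1:loops)^2)  # TRUE
```

With a loop length that is not a multiple of .steps (for example 1:10 with steps <- 3), the same split() call leaves a shorter final Job; how cloudfor itself handles that case is not covered by this sketch.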

3.2. Transferring Data Files

This example illustrates how to transfer data files to the Techila Workers. This example uses two different data file transfer methods:

- Transferring common data files required on all Techila Workers
- Transferring Job-specific data files

The material used in this example is located in the following folder in the Techila SDK:

techila\examples\R\cloudfor\2_transferring_data_files

Data files that are required on all Techila Workers can be transferred to the Techila Workers with the .datafiles control parameter. All transferred data files will be copied to the same temporary working directory as the Techila Worker Code.

For example, the following syntax would transfer a file called file1 to all participating Techila Workers.

.datafiles=list("file1")

Several files can be transferred by entering the names of the files as a comma-separated list. For example, the following syntax would transfer files called file1 and file2 to all participating Techila Workers.

.datafiles=list("file1","file2")

The syntaxes shown above assume that the files are located in the current working directory. To specify a different location for a file, prepend the file name with the path of the file. For example, the syntax shown below would retrieve file1 from the current working directory and file2 from the directory C:/temp.

.datafiles=list("file1","C:/temp/file2")

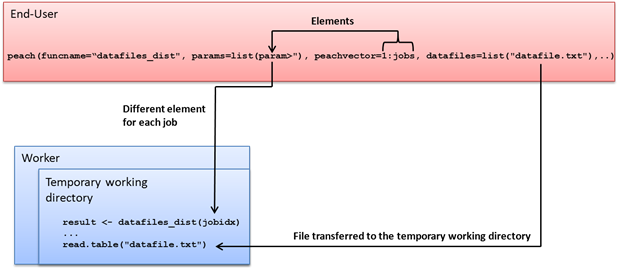

Job-specific input files can be used in situations where only some files of a data set are required during any given Job. The Job-specific input files feature can be used with the .jobinputfiles control parameter. The general syntax for defining the control parameter is shown below.

.jobinputfiles=list(
  datafiles = list(<comma separated list of file names>),
  filenames = list(<name(s) of the Job-specific input file(s) on the Worker>)
)

Note! When using Job-specific input files, the number of files listed in the datafiles parameter must be equal to the number of Jobs in the Project. This means that the .steps control parameter is typically required to ensure that the Project contains the correct number of Jobs.

An example syntax is shown below.

result <- cloudfor(i=1:2,

.steps=1,

.jobinputfiles=list(

datafiles = list("file1","file2"),

filenames = list("input.data"))) %t% {

<executable code>

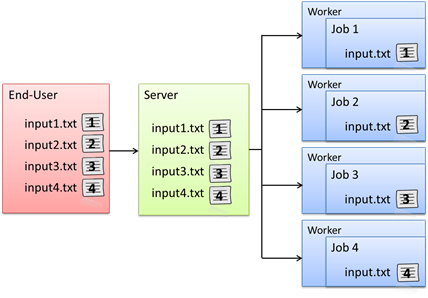

}In the example above, the value of the .steps parameter is set to one (1), which means that one (1) iteration will be performed in each Job. As the total number of iterations in the loop structure is two (2), this ensures that the Project will contain two (2) Jobs. Setting the number of Jobs to two (2) is required because the number of Job-specific input files is also two (2). File file1 will be transferred to Job 1 and file file2 will be transferred to Job 2. After the files have been transferred to the Techila Workers, each file will be renamed to input.data.

Information on how to define multiple Job-specific input files can be found in Job Input Files.
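The mapping from files to Jobs described above can be modeled locally as follows. The helper stage_job_inputs is hypothetical and only imitates the behavior described in this section (file k goes to Job k and is renamed, and the number of files must equal the number of Jobs); it is not how the Techila Server actually distributes Job-specific input files.

```r
# Hypothetical helper, NOT part of the techila package: file k in 'datafiles'
# is copied to a directory standing in for Job k and renamed to 'filename'.
stage_job_inputs <- function(datafiles, filename, n_jobs,
                             root = tempfile("jobs")) {
  if (length(datafiles) != n_jobs) {
    stop("The number of Job-specific input files must equal the number of Jobs")
  }
  job_dirs <- file.path(root, paste0("job", seq_len(n_jobs)))
  for (k in seq_len(n_jobs)) {
    dir.create(job_dirs[k], recursive = TRUE)
    file.copy(datafiles[[k]], file.path(job_dirs[k], filename))
  }
  job_dirs
}

# Usage: two source files and two Jobs, as in cloudfor(i=1:2, .steps=1, ...)
src <- tempfile("src")
dir.create(src)
writeLines("contents of file1", file.path(src, "file1"))
writeLines("contents of file2", file.path(src, "file2"))
dirs <- stage_job_inputs(list(file.path(src, "file1"), file.path(src, "file2")),
                         filename = "input.data", n_jobs = 2)
readLines(file.path(dirs[2], "input.data"))  # "contents of file2"
```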

3.2.1. Locally executable program

The locally executable program used in this example is shown below.

# Example documentation: http://www.techilatechnologies.com/help/r_cloudfor_2_transferring_data_files

# Copyright Techila Technologies Ltd.

local_function <- function() {

# This function will be executed locally on your computer and will not

# communicate with the Techila environment.

#

# Usage:

#

# result <- local_function()

# Read values from the data files

values <- read.table("datafile.txt", header=TRUE, as.is=TRUE)

targetvalue <- read.table("datafile2.txt", header=TRUE, as.is=TRUE)

# Determine the number of rows and columns of data

rows <- nrow(values)

cols <- ncol(values)

# Create empty matrix for results

result <- matrix(rep(NA, 12), rows, cols)

for (i in 1:rows) { # For each row of data

data <- values[i,] # Read the values on the row

for (j in 1:cols) { # For each element on the row

# Compare values on the row to the ones on the target row

if(identical(values[[i, j]], targetvalue[[j]])) {

result[i, j] <- TRUE # If rows match

}

else {

result[i, j] <- FALSE # If rows don't match

}

}

}

print(result)

result

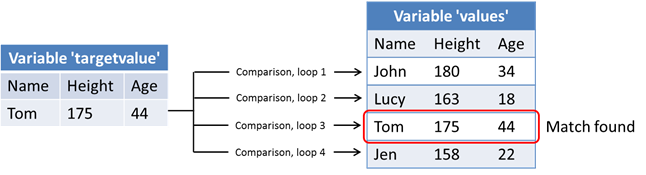

}During the initial steps of the program, the tables stored in files datafile.txt and datafile2.txt will be read and stored the variables values and targetvalue respectively. The targetvalue variable will contain one row of data and the values variable will contain four rows of data with a similar structure.

The computational part consists of comparing the values of the rows stored in the values variable with the row stored in the targetvalue variable. Each line is compared during a separate iteration of the outermost for-loop. A graphical illustration of the data is shown in the image below.

for-loop iterations. A matching row will be found during the 3rd iteration.The result of the comparison will be stored in the result-matrix, which will contain a row of FALSE values for rows that did not match. The matching row will be marked with TRUE values.

The locally executable program can be executed by changing your current working directory in R to the directory containing the material for this example and executing the command shown below:

source("local_function.r")
result <- local_function()

3.2.2. The cloudfor version

The cloudfor version of the locally executable program is shown below.

# Example documentation: http://www.techilatechnologies.com/help/r_cloudfor_2_transferring_data_files
# Copyright Techila Technologies Ltd.

library(techila)

run_datafiles <- function() {
  # This function contains the distributed version, where operations inside the
  # loop structure will be executed on Workers.
  #
  # Usage:
  #
  # result <- run_datafiles()

  # Read values from the data file
  values <- read.table("datafile.txt", header=TRUE, as.is=TRUE)

  # Determine the number of rows and columns of data
  rows <- nrow(values)
  cols <- ncol(values)

  # Create empty matrix for results that will be generated in one Job
  result <- matrix(NA, 1, cols)

  # Split the data read from file 'datafile.txt' to multiple files
  # (input1, input2, ...). These files will be stored in the Job Input Bundle.
  for (i in 1:rows) {
    data <- values[i,]
    write.table(data, file=paste("input", as.character(i), sep=""))
  }

  # Create a list of the files generated earlier ('input' followed by a number).
  inputlist <- as.list(dir(pattern="^input[0-9]+$"))

  result <- cloudfor(i=1:rows,
                     .steps=1,                          # One iteration per Job
                     .sdkroot="../../../..",            # Path to the 'techila' folder
                     .datafiles=list("datafile2.txt"),  # Common data file for all Jobs
                     .jobinputfiles=list(               # Create a Job Input Bundle
                       datafiles = inputlist,           # List of files that will be placed in the Bundle
                       filenames = list("input"))       # Name of the file on the Worker
                     ) %t% {                            # Start of the code block that will be executed on Workers
    targetvalue <- read.table("datafile2.txt", header=TRUE, as.is=TRUE)
    values <- read.table("input", header=TRUE, as.is=TRUE)

    # Compare the values stored in the common data file ('datafile2.txt') with
    # the ones stored in the Job-specific input file.
    for (j in 1:cols) {                                 # For each element
      if (identical(values[[j]], targetvalue[[j]])) {   # Compare element
        result[1, j] <- TRUE                            # If elements match
      } else {
        result[1, j] <- FALSE                           # If elements do not match
      }
    }
    result                                              # Return the 'result' variable
  }                                                     # End of the code block executed on Workers

  # Make result formatting match the one in the local version
  result <- matrix(unlist(result), rows, cols, byrow=TRUE)

  # Display result
  print(result)
  result
}

The code starts by loading the techila package, making the functions in the package available.

After loading the package, the code will load the table in the file datafile.txt, store the values in the values variable and determine the number of columns and rows in the table. An empty result array will also be created, which will be used to store row comparison results on the Techila Worker.

Before creating a Project, the code will execute an additional local for-loop. This loop will be used to create four (4) new files. Each file will contain one row of data extracted from the file datafile.txt. The first row will be stored in a file called input1, the second row in a file called input2 and so on. These files will be used as Job-specific input files and will be transferred to the TDCE environment later in the program.

After generating the files, a list containing all file names in the current working directory that consist of input followed by a number will be created. This list will be used later in the program to define a list of files that should be used as Job-specific input files.

The cloudfor-loop used in this example will range from one (1) to the number of rows in the entire data table. The results of the computational Project will be stored in the result variable.

The number of iterations performed in each Job will be set to one (1) by using the .steps parameter. This means that the Project will contain four (4) Jobs, one Job for each row of data.

The location of the techila directory is set with the .sdkroot parameter.

The .datafiles parameter defines that datafile2.txt should be transferred to all participating Techila Workers.

The .jobinputfiles parameter is used to transfer Job-specific input files. In this example, the filenames parameter contains one list item, meaning one file will be given to each Job. File input1 will be assigned to Job 1, file input2 to Job 2 and so on. Each file will be renamed to input after it has been transferred to the Techila Worker.

After transferring these files to the Worker(s), they will be loaded using the following two lines:

targetvalue <- read.table("datafile2.txt", header=TRUE, as.is=TRUE)
values <- read.table("input", header=TRUE, as.is=TRUE)

These lines will read the contents of datafile2.txt (the same in each Job) and input (different in each Job).

After reading the files, a similar element-wise comparison will be performed as in the locally executable program. The result of the comparison will be stored in variable result and returned from the Job.

After the Project has been completed, the cloudfor function will return and the results will be stored in variable result.

3.2.3. Creating the computational project

The computational Project can be created by executing the cloudfor version of the program. To execute the program, change your current working directory in R to the directory containing the material for this example and execute the commands shown below:

source("run_datafiles.r")
result <- run_datafiles()

The Project will contain four (4) Jobs. Each Job will compare the row stored in the Job-specific input file with the row in the file datafile2.txt. The result of the comparison will be stored in the result-array, which will be returned from the Techila Worker.

After all Jobs have been completed, the results will be transferred to the End-User's computer. The results returned by the cloudfor-loop will be in list form. The values in the list will be stored in a matrix, which will be identical to the one in the locally executable version.
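The final reshaping step, matrix(unlist(result), rows, cols, byrow=TRUE), is easiest to see with a small stand-in for the list returned by cloudfor. The values below are illustrative placeholders, not output from a real run; they only show why byrow=TRUE is needed to keep the result of Job k on row k.

```r
# Placeholder Job results (illustrative values, not output from a real run):
# one 1 x 3 logical matrix per Job, in Job order.
job_results <- list(
  matrix(c(FALSE, TRUE,  FALSE), 1, 3),
  matrix(c(FALSE, FALSE, FALSE), 1, 3),
  matrix(c(TRUE,  TRUE,  TRUE),  1, 3),
  matrix(c(TRUE,  FALSE, FALSE), 1, 3)
)
rows <- length(job_results)
cols <- ncol(job_results[[1]])

# unlist() concatenates the Job results in Job order; byrow = TRUE puts the
# values of Job k on row k of the final matrix.
result <- matrix(unlist(job_results), rows, cols, byrow = TRUE)
print(result)

# Without byrow = TRUE the values are filled column by column, so the rows
# would no longer correspond to Jobs.
wrong <- matrix(unlist(job_results), rows, cols)
```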

3.3. Managing Streamed Results

This example illustrates how to use the Streaming and Callback function features with the cloudfor function.

The material used in this example is located in the following folder in the Techila SDK:

techila\examples\R\cloudfor\3_streaming_callback

Streaming can be enabled with the .stream control parameter using the syntax shown below:

.stream=TRUE

When Streaming is enabled, Job results will be transferred from the Techila Server as soon as they are available. The results returned from the Techila Server will be stored at the correct indices by using an index value which will be automatically included with each returned result file.

Callback functions can be enabled with the .callback control parameter using the syntax shown below:

.callback="<callback function name>"

The notation <callback function name> would be replaced with the name of the function you wish to use. For example, the following syntax would call a function called cbFun for each Job result.

.callback="cbFun"

The callback function will receive one (1) input argument, which will contain the value returned from the Techila Worker Code.

Please note that the callback function will be called immediately each time a new Job result has been received. This means that when Streaming is used, the call order may differ from the order in which a similar loop structure would be evaluated locally. The results returned from the callback function will be placed at the correct indices by the cloudfor function.
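The interaction between Streaming, the callback function and result indices can be modeled locally. The sketch below is a hypothetical simulation, not the techila implementation: Job results are delivered in a random completion order, the callback is invoked for each one as it arrives, and the callback's return value is stored at the index that accompanies the result.

```r
# Hypothetical local model, NOT the techila implementation.
simulate_streaming <- function(job_values, callback, arrival_order) {
  results <- vector("list", length(job_values))
  for (idx in arrival_order) {                # results arrive in completion order
    results[[idx]] <- callback(job_values[[idx]])  # store at the result's own index
  }
  results
}

cbFun <- function(job_result) {
  print(paste("Job result:", job_result))  # printed in arrival order
  job_result                               # value stored by the caller
}

set.seed(1)
job_values <- as.list(c(11, 21, 12, 22, 13, 23))
arrival <- sample(length(job_values))      # random completion order
out <- simulate_streaming(job_values, cbFun, arrival)
unlist(out)  # 11 21 12 22 13 23, i.e. Job order, regardless of arrival order
```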

3.3.1. Locally executable program

The source code of the locally executable program (located in the file local_function.r) used in this example is shown below.

# Example documentation: http://www.techilatechnologies.com/help/r_cloudfor_3_streaming_callback

# Copyright Techila Technologies Ltd.

multiply <- function(a,b){

# Function containing simple arithmetic operation.

a * 10 + b

}

local_function <- function() {

# This function will be executed locally on your computer and will not

# communicate with the Techila environment.

#

# Usage:

#

# result <- local_function()

# Create empty matrix for results

result <- matrix(0, 2, 3)

print("Results generated during loop evaluations:")

for (i in 1:3) {

for (j in 1:2) {

# Pass the values of the loop counters to the 'multiply' function and

# store result in the 'result' matrix

result[j, i] <- multiply(j, i)

print(result[j, i]) # Display value returned by the 'multiply' function

}

}

print("Content of the 'result' matrix:")

print(result)

result

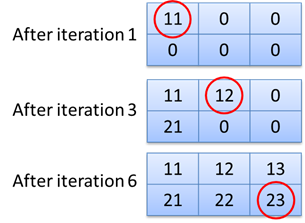

}The function called local_function contains two perfectly nested for for-loops. The innermost for-loop will call the multiply function, which will perform some simple arithmetic operations using the loop counter values i and j as input arguments. The result of the operation will be stored in the result-matrix at the indices corresponding to the values of the loop counters i and j. The value of the operation will also be printed each iteration. The content and values stored in the result-matrix are illustrated in the image below.

i and j. The value generated during the first iteration (loop counters: i=1,j=1) will be stored at indices (1,1). The value generated during iteration 3 will be stored at indices i=2, j=1 and the last value at indices i=3, j=2.3.3.2. The cloudfor version

The cloudfor version of the locally executable program is shown below.

# Example documentation: http://www.techilatechnologies.com/help/r_cloudfor_3_streaming_callback
# Copyright Techila Technologies Ltd.

library(techila)

cbfun <- function(job_result) {
  # Callback function. Will be called once for each result streamed from the
  # Techila Server.
  print(paste("Job result: ", job_result))  # Display the result
  job_result                                # Return the result
}

multiply <- function(a, b) {
  # Function containing simple arithmetic operation. Will be automatically
  # made available on the Workers
  a * 10 + b
}

run_streaming <- function() {
  # Function for creating the computational Project.
  project_result <- cloudfor(i = 1:3) %to%        # Outer cloudfor loop
                    cloudfor(j = 1:2,
                             .steps=1,              # One iteration per Job
                             .callback="cbfun",     # Pass each returned result to function 'cbfun'
                             .stream=TRUE,          # Enable streaming
                             .sdkroot="../../../.." # Path to the 'techila' directory
                             ) %t% {                # Start of code block that will be executed on Workers
    multiply(j, i)                                  # This operation will be performed on Workers
  }                                                 # End of code block executed on Workers

  # After Project has been completed, display results
  print("Content of the reshaped 'result' matrix:")
  print(project_result)
  project_result
}

The cloudfor version contains three functions:

- run_streaming
- cbfun
- multiply

The run_streaming function contains the cloudfor-loops, which have been used to replace the normal for-loops in the locally executable program. In addition, control parameters have been used to enable result streaming and to define the name of the callback function.

.stream=TRUE

The parameter shown above will enable individual Job results to be streamed from the Techila Server as soon as they are available. When streaming has been enabled, individual Job results will be returned from the Techila Server in the order the Jobs are completed. This means that the results will be returned in no specific order. The effect of this is illustrated by the callback function, which will display the results in the order in which they will be received from the Techila Server.

.callback="cbfun"

The parameter above defines the function cbfun as the callback function, meaning this function will be used to process each of the streamed Job results. In this example, the function will only print the content of the job_result variable, which will contain the value returned from each Job, and then return it. The values printed by the callback function will most likely be in a different order than in the locally executable version. These results will be automatically reshaped to a 2x3 matrix (same as in the locally executable version) after all results have been received from the Techila Server.

The multiply function is identical to the one in the locally executable version and contains simple arithmetic operations that use the values of the loop counters as input arguments. This function call is inside the innermost cloudfor-loop, meaning the function will be executed on the Techila Workers.

3.3.3. Creating the computational project

The computational Project can be created by executing the cloudfor version of the program. To execute the program, change your current working directory in R to the directory containing the material for this example and execute the command shown below:

source("run_streaming.r")
result <- run_streaming()

The Project will contain six (6) Jobs. In each of the computational Jobs, the multiply function will be called with different input arguments. The combinations of the input arguments will be identical to those in the locally executable program, meaning the operations performed in the computational Jobs will correspond to the operations performed in the locally executable program.

Individual Job results will be streamed in the order they are completed and will be automatically processed by the callback function. After all results have been received, the results will be reshaped and the matrix containing the results will be printed.

3.4. Active Directory Impersonation

The walkthrough in this Chapter is intended to provide an introduction to using Active Directory (AD) impersonation. Using AD impersonation will allow you to execute code on the Techila Workers so that the entire code is executed using your own AD user account.

The material discussed in this example is located in the following folder in the Techila SDK:

techila\examples\R\cloudfor\ad_impersonate

Note! Using AD impersonation requires that the Techila Workers are configured to use an AD account and that the AD account has been configured correctly. These configurations can only be done by persons with administrative permissions to the computing environment.

More general information about this feature can be found in the Introduction to Techila Distributed Computing Engine document.

Please consult your local Techila Administrator for information about whether or not AD impersonation can be used in your TDCE environment.

AD impersonation is enabled by setting the following Project parameter:

.ProjectParameters = list("techila_ad_impersonate" = "true")

This control parameter will add the techila_ad_impersonate Project parameter to the Project.

When AD impersonation is enabled, the entire computational process will be executed under the user’s own AD account.

3.4.1. Example material walkthrough

The source code of the example discussed here can be found in the following file in the Techila SDK:

techila\examples\R\Features\cloudfor\run_impersonate.r

The code used in this example is also illustrated below for convenience.

# Example documentation: http://www.techilatechnologies.com/help/r_cloudfor_ad_impersonate

run_impersonate <- function() {

# This function contains the cloudfor-loop, which will be used to distribute

# computations to the Techila environment.

#

# During the computational Project, Active Directory impersonation will be

# used to run the Job under the End-User's own AD user account.

#

# Syntax:

#

# source("run_impersonate.r")

# res <- run_impersonate()

# Copyright Techila Technologies Ltd.

# Load the techila package

library(techila)

# Check which user account is used locally

local_username <- system("whoami",intern=TRUE)

worker_username <- cloudfor (i=1:1, # Set the maximum number of iterations to one

.sdkroot="../../../..", # Location of the Techila SDK 'techila' directory

.ProjectParameters = list("techila_ad_impersonate" = "true") # Enable AD impersonation

) %t% {

# Check which user account is used to run the computational Job.

worker_username <- system("whoami",intern=TRUE)

}

# Print and return the results

cat("Username on local computer:",local_username, "\n")

cat("Username on Worker computer:",worker_username, "\n")

list(local_username,worker_username)

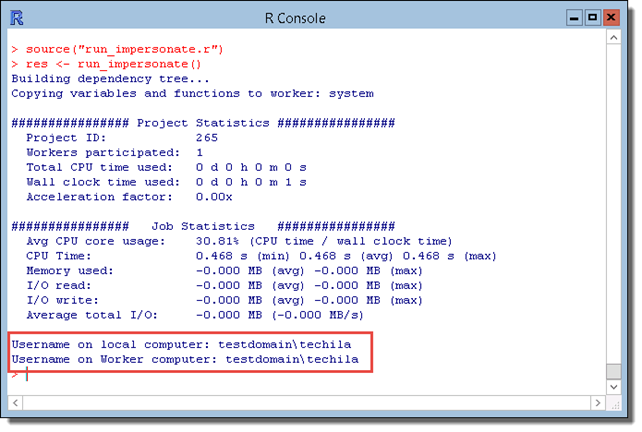

}The code starts by executing the operating system command whoami, which displays the current domain and user name. This command will be executed on the End-User’s computer, meaning the command will return the End-User’s own AD user name. The user name will be stored in the local_username variable.

The cloudfor-loop used in this example will create a computational Project, which will consist of one Job.

AD impersonation is enabled by using the Project parameter techila_ad_impersonate. With this parameter enabled, the entire computational process will be executed using the End-User’s own AD user account.

The whoami command is then used to get the identity of the Job’s owner on the Techila Worker. Because AD impersonation has been enabled, this command should return the End-User’s AD user name and domain. If AD impersonation would be disabled (e.g. by removing the techila_ad_impersonate Project parameter), this command would return the Techila Worker’s AD user name.

After the Project has been completed, information about which AD user account was used locally and during the computational Job will be displayed.

3.4.2. Creating the Project

To create the computational Project, change your current working directory in your R environment to the directory that contains the example material relevant to this example.

After having browsed to the correct directory, you can source the Local Control Code using command:

source("run_impersonate.r")

After having sourced the file, create the computational Project using command:

res <- run_impersonate()

After the Project has been completed, information about the AD user accounts will be displayed. Please note that the output generated by the program will change based on your domain and AD account user names. The example screenshot below illustrates the program output when the End-User’s own AD account name is techila and the domain name is testdomain.

3.5. Using Semaphores

The walkthrough in this Chapter provides an introduction to creating Project-specific semaphores, which can be used to limit the number of simultaneous operations.

The material discussed in this example is located in the following folder in the Techila SDK:

techila\examples\R\cloudfor\semaphores

More general information about this feature can be found in the "Introduction to Techila Distributed Computing Engine" document.

Semaphores can be used to limit the number of simultaneous operations performed in a Project. There are two different types of semaphores:

- Project-specific semaphores
- Global semaphores

Project-specific semaphores must be created in the code that is executed on the End-User’s computer. To limit the number of simultaneous processes, the semaphore tokens must then be reserved in the code executed on the Techila Workers. Global semaphores can only be created by Techila Administrators.

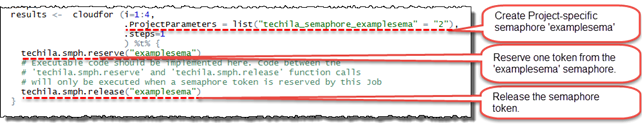

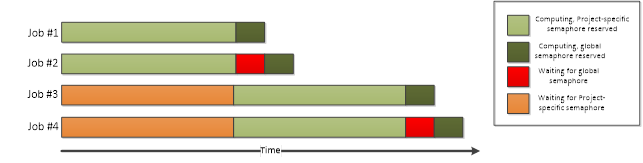

The example figure below illustrates how to use Project-specific semaphores. The semaphore is created by using a Project parameter with the techila_semaphore_ prefix followed by the name of the semaphore. The parameter used in the example creates a Project-specific semaphore called examplesema with two semaphore tokens.

The functions used for reserving and releasing the semaphore tokens are intended to be executed on the Techila Worker. If they are executed on the End-User’s computer, they will generate an error, because the functions are not defined there and the End-User’s computer does not have all the necessary TDCE components. For this reason, the .steps control parameter must be used to prevent the code from being executed locally on the End-User’s computer.

The semaphore token will be reserved by the techila.smph.reserve("examplesema") function call. This function call will return when the semaphore token has been reserved from the Project-specific semaphore called examplesema. If no tokens are available, the function will wait until a token becomes available.

The semaphore token will be released by the techila.smph.release("examplesema") function call.
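The effect of the token limit can be estimated with simple arithmetic. The sketch below is an idealised model, not a description of the TDCE scheduler: it assumes all Jobs start at the same time, each Job holds one token for the same number of seconds, and a waiting Job gets a token as soon as another Job releases one. semaphore_windows is a hypothetical helper, not part of the techila package.

```r
# Idealised model (hypothetical helper, not part of the techila package):
# all Jobs start at t = 0, each holds one token for hold_s seconds, and a
# waiting Job gets a token as soon as another Job releases one.
semaphore_windows <- function(n_jobs, tokens, hold_s) {
  # Jobs 1..tokens form wave 0, the next 'tokens' Jobs form wave 1, and so on.
  wave <- (seq_len(n_jobs) - 1) %/% tokens
  data.frame(job   = seq_len(n_jobs),
             start = wave * hold_s,        # time the token is obtained
             end   = (wave + 1) * hold_s)  # time the token is released
}

# Four Jobs, a two-token semaphore and 30 seconds of work per token:
semaphore_windows(n_jobs = 4, tokens = 2, hold_s = 30)
```

In this model, Jobs 1 and 2 hold the tokens from 0 to 30 seconds and Jobs 3 and 4 wait until 30 seconds, holding the tokens from 30 to 60 seconds. Real time windows will also include Job start-up delays and depend on how many Techila Workers are available.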

Creating semaphores

As illustrated in the figure above, Project-specific semaphores are created by adding a Project parameter. The following syntaxes can be used when defining the Project parameter:

list("techila_semaphore_<name>" = "size")

list("techila_semaphore_<name>" = "size,expiration")

The list("techila_semaphore_<name>" = "size") syntax creates a Project-specific semaphore with the defined <name> and sets the maximum number of tokens to match the value defined in size. The semaphore tokens will not have an expiration time, meaning the tokens can be reserved indefinitely.

For example, the following syntax could be used to create a semaphore with the name examplesema, which would have 10 tokens. This means that a maximum of 10 tokens can be reserved at any given time.

.ProjectParameters = list("techila_semaphore_examplesema" = "10")

The list("techila_semaphore_<name>" = "size,expiration") syntax defines the <name> and size of the semaphore in the same way as the syntax shown above. In addition, this syntax defines an expiration time (in seconds) for the tokens with the expiration argument. If a Job holds a semaphore token for longer than the expiration time, the token is automatically released and made available to other Jobs in the Project. The process that exceeded the expiration time is allowed to continue normally.

For example, the following syntax could be used to define a 15-minute (900-second) expiration time for each reserved token.

.ProjectParameters = list("techila_semaphore_examplesema" = "10,900")
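Because the semaphore definition is an ordinary named R list, it can also be built programmatically. The sketch below assumes nothing beyond the two syntaxes described above; semaphore_param is a hypothetical helper, not part of the techila package. Values are converted through as.integer so that large numbers are not written in scientific notation (e.g. "1e+05").

```r
# Hypothetical helper (not part of the techila package): build the Project
# parameter that creates a Project-specific semaphore.
#   name       - semaphore name, appended to the "techila_semaphore_" prefix
#   size       - maximum number of tokens that can be reserved at the same time
#   expiration - optional token expiration time in seconds
semaphore_param <- function(name, size, expiration = NULL) {
  value <- as.character(as.integer(size))               # "size" syntax
  if (!is.null(expiration)) {
    value <- paste(value, as.integer(expiration), sep = ",")  # "size,expiration" syntax
  }
  setNames(list(value), paste0("techila_semaphore_", name))
}

semaphore_param("examplesema", 10)                        # "10"
semaphore_param("examplesema", 10, expiration = 15 * 60)  # "10,900"
```

The result could then be passed to cloudfor, for example as .ProjectParameters = semaphore_param("examplesema", 2).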

Reserving semaphores

As illustrated in the image above, semaphore tokens are reserved by using the techila.smph.reserve function:

techila.smph.reserve(name, isglobal = FALSE, timeout = -1, ignoreerror = FALSE)

When a semaphore token is successfully reserved, the techila.smph.reserve function will, by default, return the value TRUE. Respectively, if there was a problem in the semaphore token reservation process, this function will, by default, generate an error. This behaviour can be modified with the ignoreerror argument as explained later in this Chapter.

The only mandatory argument is the name argument, which is used to define which semaphore should be used. The remaining arguments isglobal, timeout and ignoreerror are optional and can be used to modify the behaviour of the semaphore reservation process. The usage of these arguments is illustrated with example syntaxes below.

techila.smph.reserve(name) will reserve one token from the semaphore, which has the same name as defined with the name input argument. This syntax can only be used to reserve tokens from Project-specific semaphores.

For example, the following syntax could be used to reserve one token from a semaphore named examplesema. The token remains reserved until it is released with the techila.smph.release function.

techila.smph.reserve("examplesema")

techila.smph.reserve(name, isglobal=TRUE) can be used to reserve one token from a global semaphore whose name matches the one defined with the name argument. Setting isglobal to TRUE specifies that the semaphore is global; setting it to FALSE (the default) specifies that the semaphore is Project-specific.

For example, the following syntax could be used to reserve one token from a global semaphore called globalsema.

techila.smph.reserve("globalsema", isglobal=TRUE)

techila.smph.reserve(name, timeout=10) can be used to reserve a token from a Project-specific semaphore (or a global semaphore if the syntax defines isglobal=TRUE) whose name matches the one defined with the name input argument. In addition, this syntax defines a value for the timeout argument, which sets a timeout period (in seconds) for the reservation process. When a timeout period is defined, a timer is started when the function requests a semaphore token. If no semaphore token can be reserved within the specified time window, the Job will be terminated and will generate an error. Setting timeout to -1 (the default) disables the timeout.

For example, the following syntax could be used to reserve one token from the Project-specific semaphore called examplesema with a 10-second timeout. This means that the command will wait for a maximum of 10 seconds for a semaphore token to become available. If no token is available after 10 seconds, the code will generate an error, which will cause the Job to be terminated.

techila.smph.reserve("examplesema", timeout=10)

techila.smph.reserve("examplesema", isglobal=TRUE, timeout=10, ignoreerror=TRUE) defines the name, isglobal and timeout arguments as explained earlier. In addition, the ignoreerror argument specifies that problems during the semaphore token reservation process should be ignored.

If the ignoreerror argument is set to TRUE and there is a problem with the semaphore reservation process, the techila.smph.reserve function will return the value FALSE (instead of generating an error) and the code is allowed to continue normally. Setting ignoreerror to FALSE (the default) restores the default behaviour of generating an error.

The example code snippet below illustrates how to reserve a token from a global semaphore called globalsema. If the token is reserved successfully, the code inside the if (reservedok) block is executed. If no semaphore token could be reserved, the code inside the else if (!reservedok) block is executed.

reservedok <- techila.smph.reserve("globalsema", isglobal = TRUE, ignoreerror = TRUE)

if (reservedok) {
  # Execute this code block if the semaphore token was reserved
  # successfully.
  techila.smph.release("globalsema", isglobal = TRUE)
} else if (!reservedok) {
  # Execute this code block if there was a problem with the
  # reservation process.
}

Releasing semaphores

As mentioned earlier and illustrated by the above code sample, each semaphore token that was reserved with a techila.smph.reserve function call must be released by using the techila.smph.release function:

techila.smph.release(name, isglobal = FALSE)

The effect of the input arguments is explained below using example syntaxes:

The techila.smph.release(name) syntax can be used to release a semaphore token belonging to a Project-specific semaphore with the name specified in name. This syntax cannot be used to release a token belonging to a global semaphore. The example syntax shown below could be used to release a token belonging to a Project-specific semaphore called examplesema.

techila.smph.release("examplesema")

If you want to release a semaphore token belonging to a global semaphore, this can be done by setting the value of the isglobal argument to TRUE.

For example, the following syntax could be used to release a token belonging to a global semaphore called globalsema.

techila.smph.release("globalsema", isglobal=TRUE)
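Because every reserved token must be released, one way to make the release automatic is R’s on.exit mechanism. The sketch below is one possible pattern, not the approach used in the Techila examples. with_semaphore is a hypothetical helper, and the two techila.smph.* definitions are local stand-ins that only let the sketch run outside TDCE; they must not be included in code that runs on a Techila Worker, where the real functions are available. The stand-ins also assume that reserving from a semaphore that does not exist counts as a problem in the reservation process.

```r
# --- Hypothetical local stand-ins (NOT the real Techila functions) -----------
# Assumption: reserving from an unknown semaphore is a reservation "problem".
known_semaphores <- c("examplesema")
tokens_held <- 0L

techila.smph.reserve <- function(name, isglobal = FALSE, timeout = -1,
                                 ignoreerror = FALSE) {
  if (!(name %in% known_semaphores)) {
    if (ignoreerror) return(FALSE)
    stop("semaphore '", name, "' could not be reserved")
  }
  tokens_held <<- tokens_held + 1L
  TRUE
}

techila.smph.release <- function(name, isglobal = FALSE) {
  tokens_held <<- tokens_held - 1L
  invisible(NULL)
}

# --- Hypothetical helper -----------------------------------------------------
# Reserve one token, evaluate 'expr', and release the token when the function
# exits, even if 'expr' raises an error. If the reservation fails and
# ignoreerror = TRUE, return 'fallback' instead of evaluating 'expr'.
with_semaphore <- function(name, expr, fallback = NULL, isglobal = FALSE,
                           ignoreerror = FALSE) {
  ok <- techila.smph.reserve(name, isglobal = isglobal, ignoreerror = ignoreerror)
  if (!isTRUE(ok)) return(fallback)
  on.exit(techila.smph.release(name, isglobal = isglobal), add = TRUE)
  expr  # lazily evaluated here, i.e. only while the token is held
}

with_semaphore("examplesema", sum(1:10))  # 55, token released afterwards
with_semaphore("globalsema", sum(1:10),
               fallback = "Error when using global semaphore.",
               isglobal = TRUE, ignoreerror = TRUE)
```

The design relies on R’s lazy evaluation: the expr argument is not evaluated until after the token has been reserved, and the on.exit handler releases the token on both normal and error exits.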

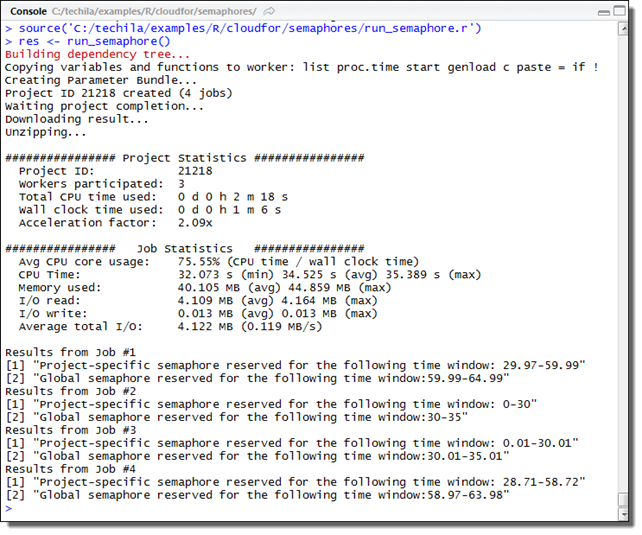

3.5.1. Example material walkthrough

The source code of the example discussed here can be found in the following file in the Techila SDK:

techila\examples\R\Features\cloudfor\run_semaphore.r

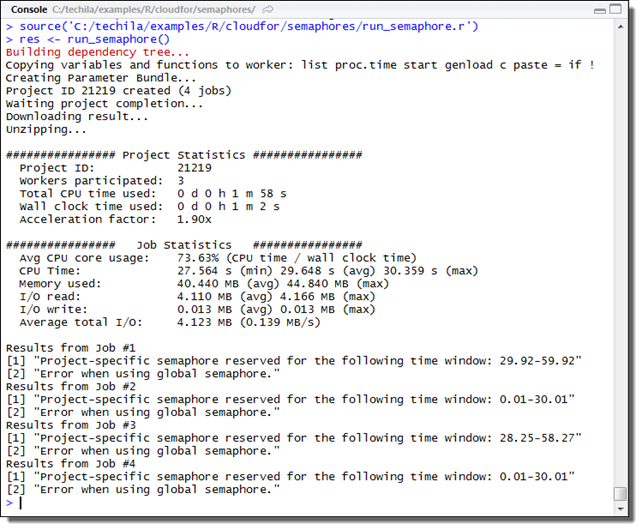

The code used in this example is also illustrated below for convenience.

# Example documentation: http://www.techilatechnologies.com/help/r_cloudfor_semaphores

run_semaphore <- function() {

# This function contains the cloudfor-loop, which will be used to distribute

# computations to the Techila environment.

#

# During the computational Project, semaphores will be used to limit the number

# of simultaneous operations in the Project.

#

# Syntax:

#

# result = run_semaphore()

# Copyright Techila Technologies Ltd.

# Load the techila package

library(techila)

# Set the number of loops to four

loops <- 4

results <- cloudfor (i=1:loops, # Loop contains four iterations

.ProjectParameters = list("techila_semaphore_examplesema" = "2"), # Create Project-specific semaphore named 'examplesema', which will have two tokens.

.sdkroot="../../../..", # Location of the Techila SDK 'techila' directory

.steps=1 # Perform one iteration per Job

) %t% {

result <- list()

# Get current timestamp. This marks the start time of the Job.

jobStart <- proc.time()[3]

# Reserve one token from the Project-specific semaphore

techila.smph.reserve("examplesema")

# Get current timestamp. This marks the time when the semaphore token was reserved.

tstart <- proc.time()[3]

# Generate CPU load for 30 seconds.

genload(30)

# Calculate a time window during which CPU load was generated.

twindowstart <- tstart - jobStart

twindowend <- proc.time()[3] - jobStart

# Build a result string, which includes the time window

result <- c(result, paste("Project-specific semaphore reserved for the following time window: ", twindowstart, "-", twindowend, sep=""))

# Release the token from the Project-specific semaphore 'examplesema'

techila.smph.release("examplesema")

# Attempt to reserve a token from a global semaphore named 'globalsema'

reservedok = techila.smph.reserve("globalsema", isglobal=TRUE, ignoreerror=TRUE)

if (reservedok) { # This code block will be executed if the semaphore was reserved successfully.

start2 = proc.time()[3]

genload(5)

twindowstart = start2 - jobStart

twindowend = proc.time()[3] - jobStart

techila.smph.release("globalsema",isglobal=TRUE)

result <- c(result, paste("Global semaphore reserved for the following time window: ", twindowstart, "-", twindowend, sep=""))

} else if (!reservedok) { # This code block will be executed if there was a problem in reserving the semaphore.

result <- c(result,"Error when using global semaphore.")

}

result

}

results

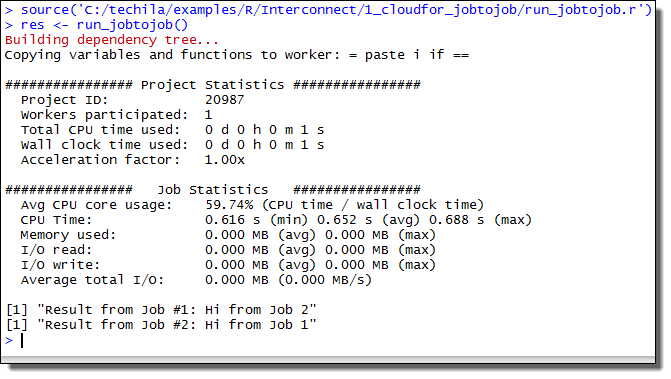

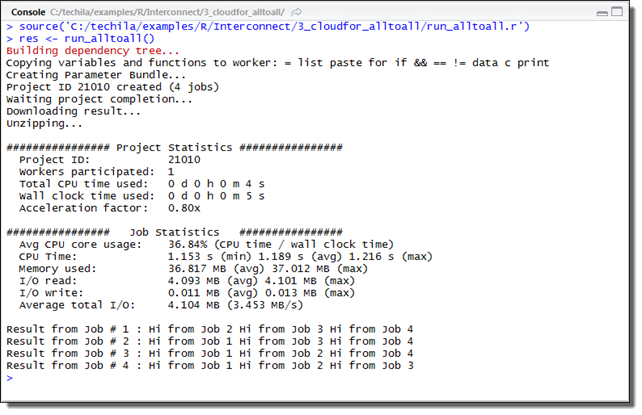

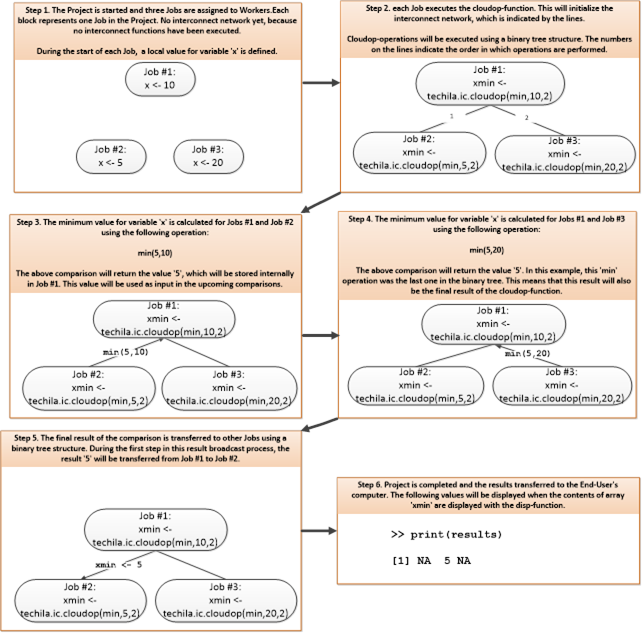

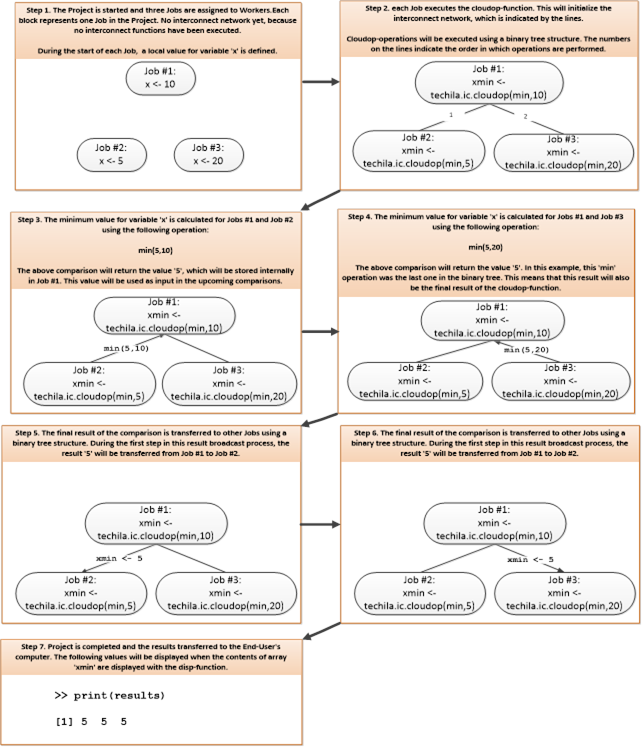

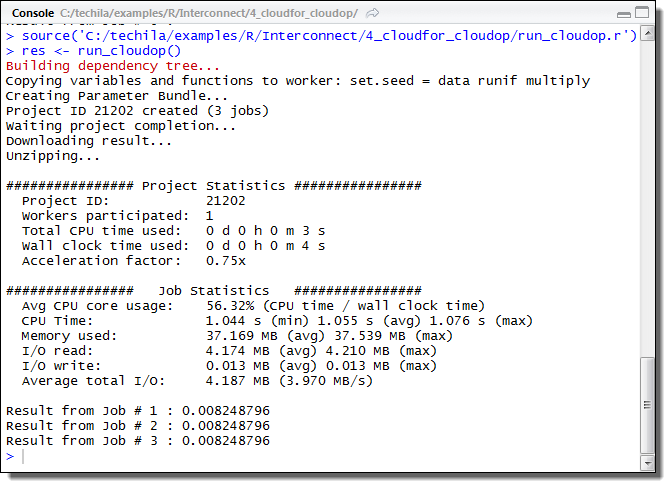

for (x in 1:length(results)) {